Unpaired image-to-image translation is a class of vision problems whose goal is to learn a mapping between different image domains using unpaired training data. Cycle-consistency loss is a widely used constraint for such problems. However, because it imposes a strict pixel-level constraint, it cannot perform shape changes, remove large objects, or ignore irrelevant texture. In this paper, we propose a novel adversarial-consistency loss for image-to-image translation. This loss does not require the translated image to be translated back into a specific source image. Instead, it encourages the translated images to retain important features of the source images, which overcomes the drawbacks of cycle-consistency loss noted above. Our method achieves state-of-the-art results on three challenging tasks: glasses removal, male-to-female translation, and selfie-to-anime translation.
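To make the "strict pixel-level constraint" concrete, the sketch below computes a standard L1 cycle-consistency loss, the mean of |g_ts(g_st(x)) - x| over pixels. It is an illustration under simplifying assumptions, not this paper's implementation: images are assumed to be flat lists of intensities in [0, 1], and `g_st`, `g_ts`, `remove_glasses` and `identity` are hypothetical stand-ins for trained generators. The toy example shows that once the S-to-T mapping erases content (here, "glasses" pixels), any back-mapping that cannot restore those exact pixels is penalized. This is the pressure that pushes cycle-consistent generators to keep or hide information about objects they should remove.

```python
from typing import Callable, List

# Hypothetical representation: an image is a flat list of pixel intensities.
Image = List[float]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute pixel difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    x_s: Image,
    g_st: Callable[[Image], Image],
    g_ts: Callable[[Image], Image],
) -> float:
    """Pixel-level cycle loss: mean |g_ts(g_st(x_s)) - x_s|."""
    return l1_distance(g_ts(g_st(x_s)), x_s)


def remove_glasses(img: Image) -> Image:
    """Toy S -> T mapping (assumption): pixels above 0.8 stand for glasses and are erased."""
    return [0.0 if p > 0.8 else p for p in img]


def identity(img: Image) -> Image:
    """Toy T -> S mapping (assumption): it has no way to know where glasses were."""
    return list(img)


face_with_glasses = [0.2, 0.9, 0.9, 0.3]
loss = cycle_consistency_loss(face_with_glasses, remove_glasses, identity)
print(loss)  # → 0.45: two of four pixels are off by 0.9
```

Only a reconstruction that reproduces the source pixel for pixel drives this loss to zero. The adversarial-consistency loss proposed here relaxes exactly that requirement.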

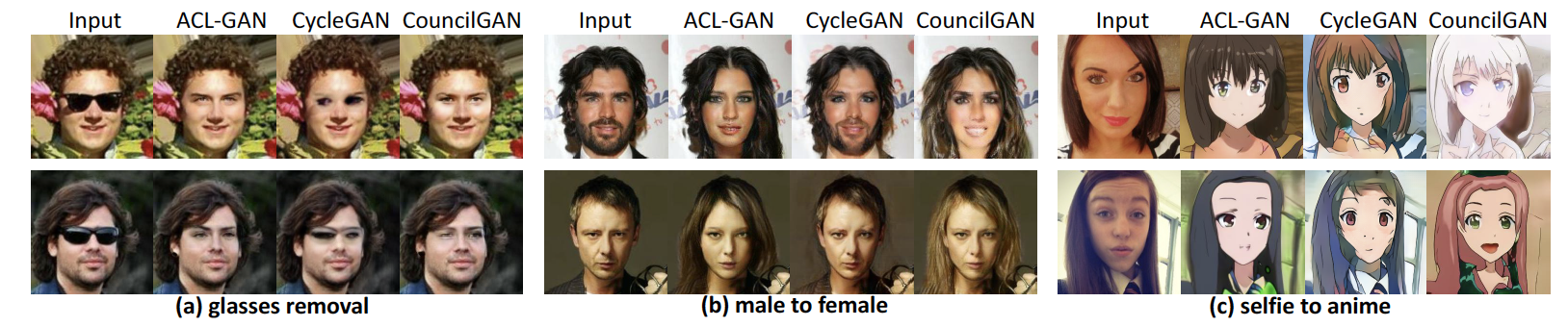

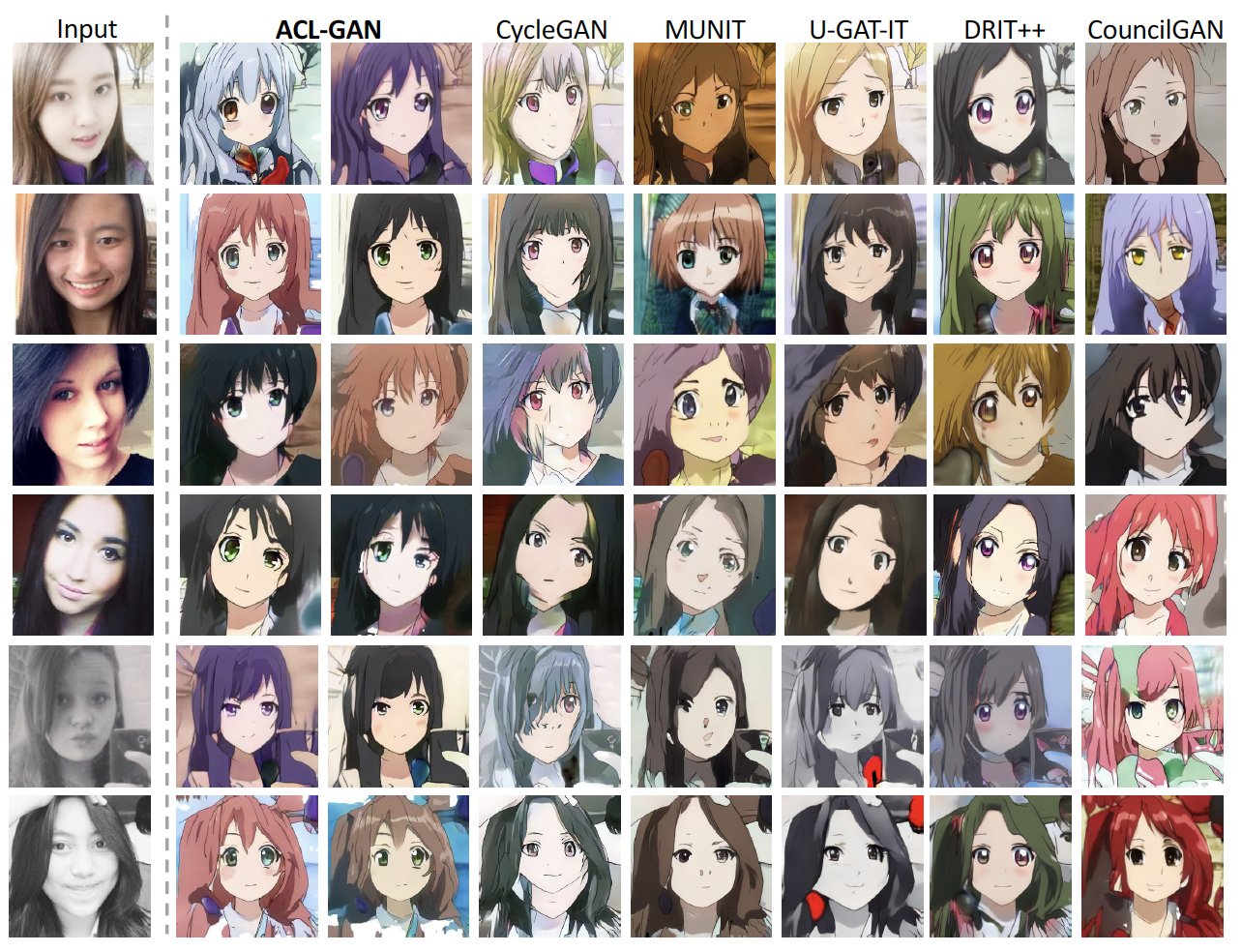

Figure 1. Example results of our ACL-GAN and baselines. Our method does not require cycle consistency, so it can bypass unnecessary features. Moreover, with the proposed adversarial-consistency loss, our method can explicitly encourage the generator to maintain the commonalities between the source and target domains.

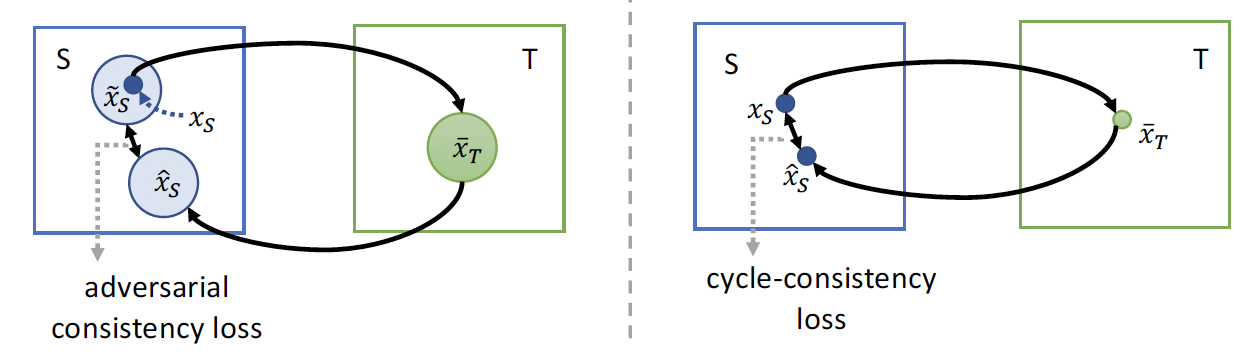

Figure 2. Comparison of adversarial-consistency loss and cycle-consistency loss. The blue and green rectangles represent image domains S and T, respectively. Any point inside a rectangle represents a specific image in that domain.

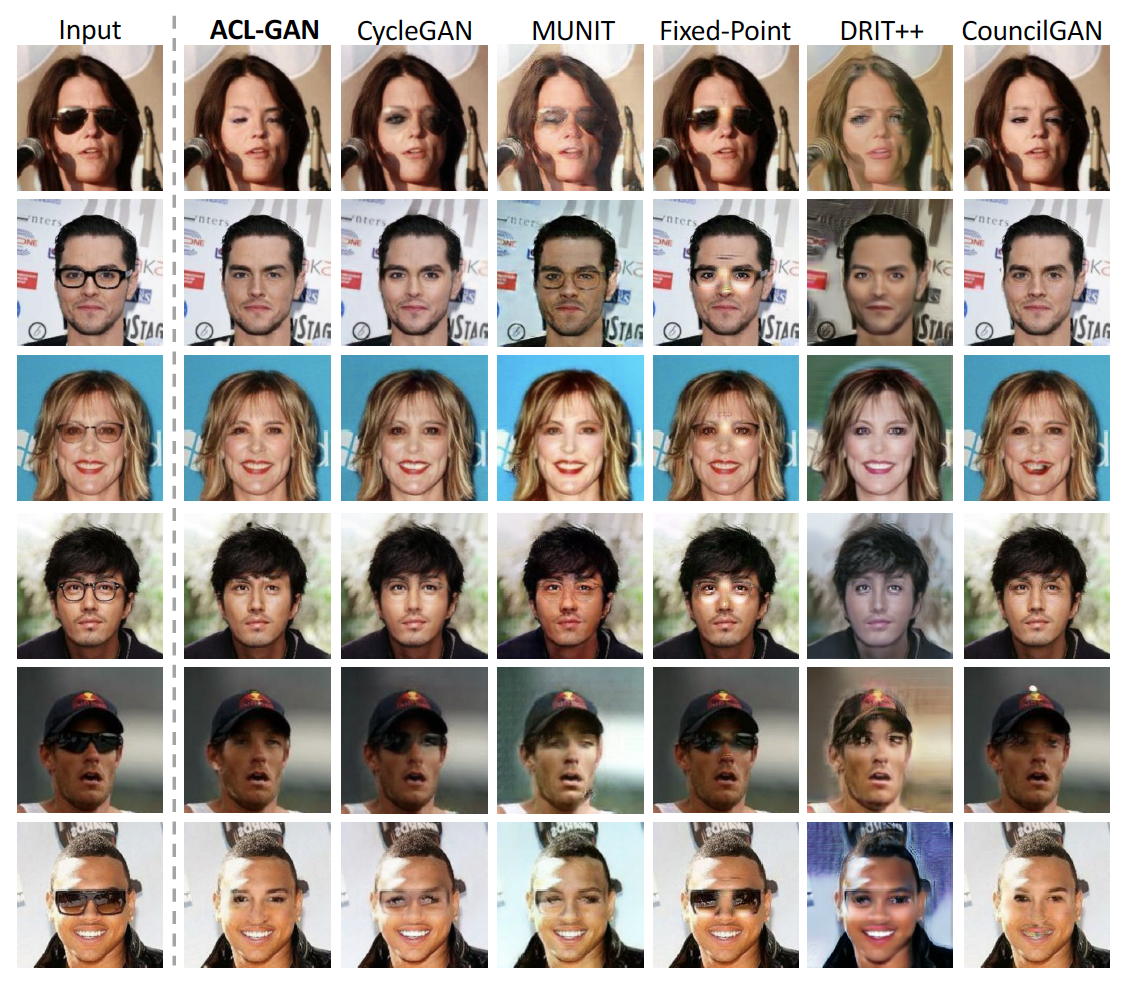

Figure 3. Comparison against baselines on glasses removal.

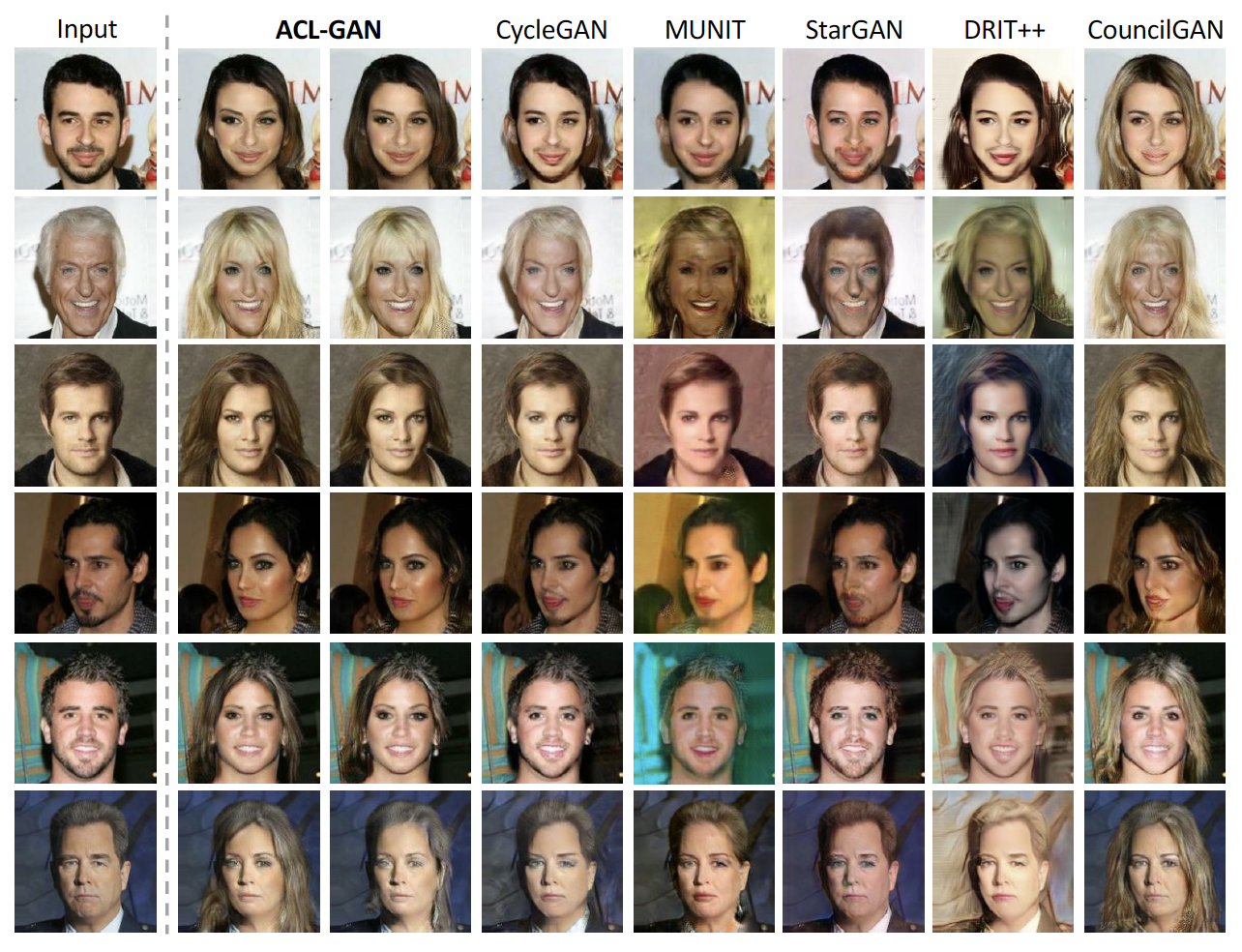

Figure 4. Comparison against baselines on male-to-female translation.

Figure 5. Comparison against baselines on selfie-to-anime translation.

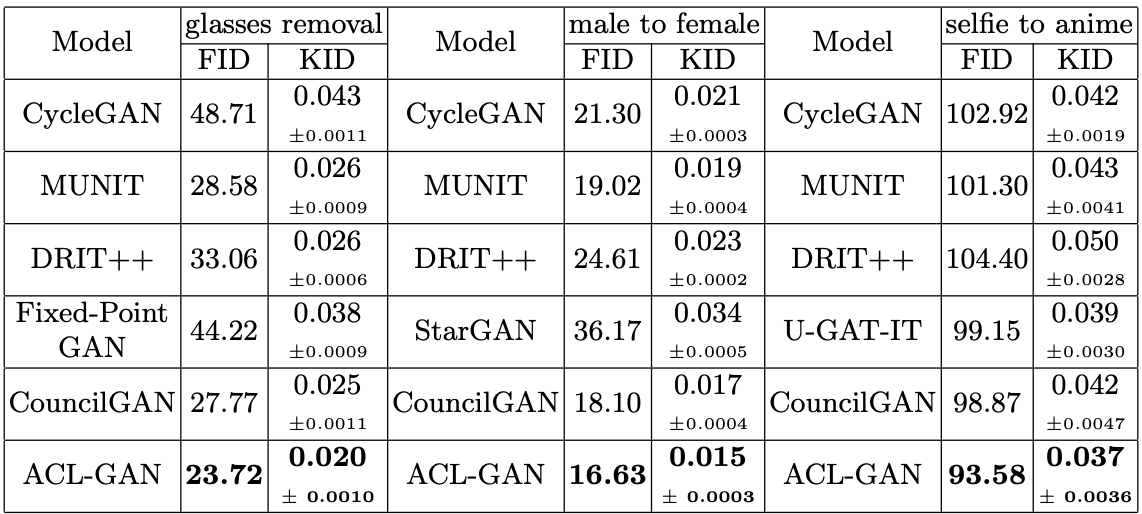

Figure 6. Quantitative comparisons to baseline methods. We show quantitative comparisons between our algorithm and the baseline methods.

This work was supported by funding for building an AI supercomputer prototype from Peng Cheng Laboratory (8201701524), start-up research funds from Peking University (7100602564), and the Center on Frontiers of Computing Studies (7100602567). We would also like to thank the Imperial Institute of Advanced Technology for GPU support.